3. JTI - Production

Goals

In the previous exercises, you have learned how to develop your application locally. Now, it's time to take the next step and push the code to production. This includes:

- Setting up the infrastructure.

- Creating production versions of your Docker and Compose files.

- Setting up a pipeline for CI/CD that deploys the application on a staging and production server.

Prerequisites

By now, you should have an application with Docker and Docker Compose files in a repository at gitlab.lnu.se.

Ensure you watch or follow along in Demo #6.

1. Setting up the infrastructure

The application will be deployed to a server in OpenStack with Docker (runtime) and Nginx (reverse proxy) installed.

You've already learned how to accomplish this in the course! Utilize the scripts and knowledge you've acquired to finish this step. The requirements for the infrastructure are provided below.

We strongly recommend that you work through this yourself. However, we have prepared an infrastructure project that you may use if you prefer. Note! Make sure you understand the project if you use it. (This project uses cloud-init instead of Ansible.)

1.1 Requirements

The code for creating the infrastructure will be version controlled in a separate project called "infra" or "infrastructure".

1.1.1 Infrastructure

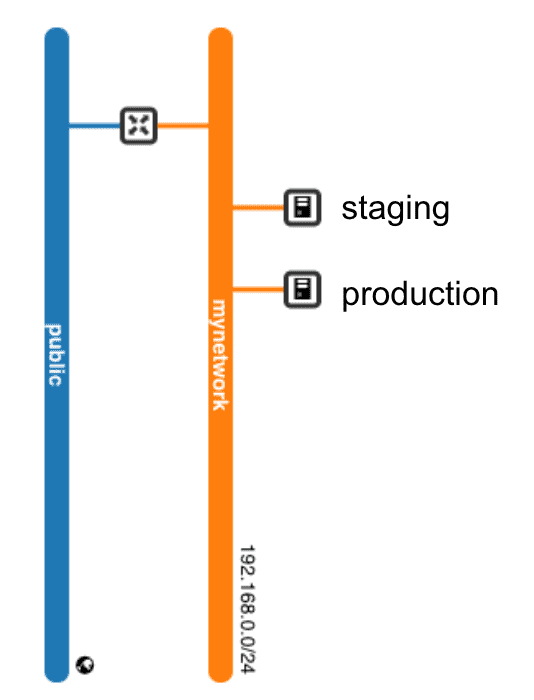

We need an infrastructure with the following:

- Router

- Network

- Security groups for

  - SSH: 22

  - HTTP: 80

  - HTTPS: 443

- 2 instances

  - staging

  - production

1.1.2 Provisioning

System

- Ubuntu 20.04

- Patched and updated

NGINX

- Listen and forward traffic from :80 to :443

- Listen on :443

- SSL certificate, either self-signed or from a provider like Let's Encrypt

Docker

- Installed and running

- ubuntu user in docker group

Confirm the infrastructure

If all is well, the two provisioned servers should now be up and running. Confirm by visiting each server on port 443 (https://<server-ip>) in the browser. Each should return 502 Bad Gateway, since the applications have not been started yet.
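The same check can be scripted from your own machine. This is a minimal sketch, assuming curl is installed and that the servers use a self-signed certificate (hence the -k flag). The function names and the <server-ip> placeholder are illustrative and not part of the course material.

```shell
# Print the HTTP status code a server returns on port 443.
# -k skips certificate verification, which a self-signed certificate requires.
# curl reports 000 when it gets no HTTP response at all.
http_status() {
  curl -k -s -o /dev/null -w '%{http_code}' "https://$1/"
}

# Interpret the status code for this stage of the exercise.
describe_status() {
  case "$1" in
    502) echo "NGINX is up; no application behind it yet (expected)" ;;
    000) echo "no response; check security groups and the NGINX service" ;;
    *)   echo "unexpected status $1" ;;
  esac
}

# Usage (replace the placeholder with the IP of each server):
#   describe_status "$(http_status <server-ip>)"
```

A 502 at this point is the healthy result: NGINX is answering, but nothing is listening behind it yet.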

Troubleshooting

Check the status in CSCloud.

SSH into the machines and look at logs.

What is the NGINX status?

2. Docker and Docker Compose

Now, switch to the application project.

You have already created Docker and Docker Compose files. However, these are optimized for local development and not production. Now, you need to:

- Create a Dockerfile for production

- Create a Docker Compose structure for production and development

2.1 Dockerfile

Create a new Dockerfile named Dockerfile.production and make it production-ready.

The main differences between Dockerfile and Dockerfile.production:

- Use npm ci --omit=dev to avoid installing dev dependencies.

- Start the application with:

CMD ["node", "src/server.js"]

Read more: Dockerizing a Node.js web app

2.2 Docker Compose

Docker Compose files are composable. You can pass several files and they are merged into one configuration, with values in later files overriding those in earlier ones. Because of this, create two new files:

- docker-compose.development.yaml

- docker-compose.production.yaml

In the original docker-compose file, include everything that is common in both development and production. This includes:

- For the mongodb service

  - The container name

  - The image being used

- For the just-task-it service

  - The container name

  - build context

  - environment variables

  - depends on mongodb

  - port mapping

In the docker-compose.development.yaml file, specify:

- volumes for development

- use a .env file for environment variables

In the docker-compose.production.yaml file, specify:

- volume for MongoDB in production (no need for volumes for taskit).

- NODE_ENV=production

- That you want to use Dockerfile.production when building taskit.
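The merge order is controlled by the order of the -f flags on the command line. The sketch below assumes the base file is called docker-compose.yaml and that the override files use the names listed above. The compose_files helper is hypothetical and only there to avoid retyping the flags.

```shell
# Build the -f flags for an environment: base file first, override file last,
# so that values in the override file win.
# $1 is "development" or "production".
compose_files() {
  echo "-f docker-compose.yaml -f docker-compose.$1.yaml"
}

# Usage (the unquoted substitution is intentional, so the flags are split):
#   docker compose $(compose_files production) config         # print the merged result
#   docker compose $(compose_files development) up            # run locally
#   docker compose $(compose_files production) up -d --build  # production build
```

Running the config subcommand first is a cheap way to see what the merged configuration looks like before starting any containers.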

Example project - Dockerized Web Application

Environment variables

For convenience, most of the environment variables are defined in the docker-compose.yaml file. However, for development purposes, we choose to specify some in the .env file. Later, these will be set in GitLab for staging and production environments.

See Example project - docker-compose.yaml for reference.

In our .env file, we need to specify at a minimum:

Confirm production files

You can test your production files by running the Docker commands locally while instructing Docker to execute them on your staging server.

Docker will look for the environment variable DOCKER_HOST. If found, Docker will execute its commands against that host. You should be able to:

MacOS and Linux

Windows

...where you need to change the IP to the IP of your staging server. In this case, you also need to add your private SSH key to the SSH agent using the ssh-add command.
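As a rough sketch of what this can look like on macOS and Linux: Docker accepts an ssh:// URL in DOCKER_HOST. The example assumes the login user is ubuntu (the user added to the docker group during provisioning), uses 203.0.113.10 as a placeholder IP from the documentation range, and assumes the base Compose file is named docker-compose.yaml. The docker_host_for helper is hypothetical.

```shell
# Build the DOCKER_HOST value for a server reachable over SSH.
# Assumption: the login user on the server is "ubuntu".
docker_host_for() {
  echo "ssh://ubuntu@$1"
}

# Usage (replace the placeholder IP and key path with your own):
#   ssh-add ~/.ssh/<your-private-key>
#   export DOCKER_HOST="$(docker_host_for 203.0.113.10)"
#   docker compose -f docker-compose.yaml -f docker-compose.production.yaml up -d --build
#   unset DOCKER_HOST    # go back to the local Docker engine
```

In PowerShell on Windows, the variable would instead be set with $env:DOCKER_HOST = "ssh://ubuntu@203.0.113.10" before running the same docker compose command.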

Don't proceed until you get everything to work. (Troubleshooting through a GitLab pipeline is a slower process.)

3. Deploy through a pipeline

When everything works, you want your code to be deployed when it is pushed to GitLab. There are many branching strategies to choose from, and you should probably protect the main branch so that changes always reach it through a Merge Request that can be audited and approved before the code goes to production. For now, however, you will start by making sure that new commits to main are deployed automatically to the staging server and manually to the production server.

3.1 The .gitlab-ci.yml file

To create a pipeline on GitLab, simply create a file named .gitlab-ci.yml and place it in the root of your project. (Doing this in the web UI of GitLab is pretty neat.)

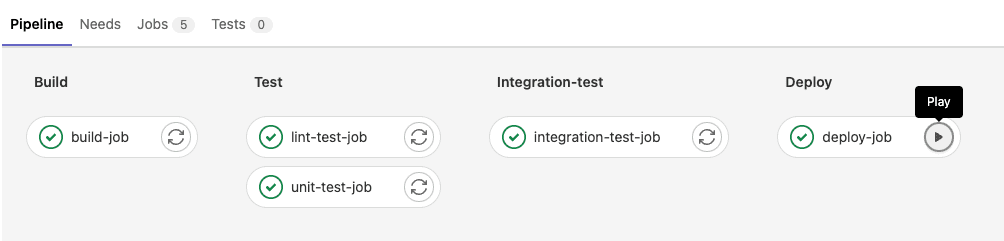

Create two stages named "integration-test" (staging) and "deploy" (production). Start with the staging stage first since the production stage is more or less a copy of staging.

Some tips on the way:

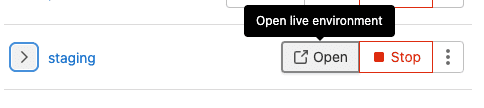

- Setting the "environment" to "staging" and providing a URL will make testing even easier.

- You need to make sure that the GitLab runner executing the pipeline has access to your private SSH key. GitLab has good information on how to do this.

- Pay attention to "Verifying the SSH host keys" in the documentation at GitLab. Your runner must trust your newly created servers. (see environment variables)

- If you created the pipeline from a template, you can lower the sleeps that simulate testing and linting.
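The SSH-related tips roughly translate to the following steps in the job's script. This is a sketch modeled on the approach in GitLab's SSH key documentation, not the course's reference solution. It assumes the SSH_PRIVATE_KEY and SSH_KNOWN_HOSTS variables described under "Pipeline environment variables" are set, and that the runner image has an SSH client installed. The function names are hypothetical.

```shell
# Make the runner trust the servers by installing their host keys.
trust_servers() {
  mkdir -p "$HOME/.ssh"
  chmod 700 "$HOME/.ssh"
  printf '%s\n' "$SSH_KNOWN_HOSTS" >> "$HOME/.ssh/known_hosts"
  chmod 644 "$HOME/.ssh/known_hosts"
}

# Start an SSH agent and load the private key from the CI variable.
load_deploy_key() {
  eval "$(ssh-agent -s)" > /dev/null
  # tr strips carriage returns that can sneak in when the key is pasted.
  printf '%s\n' "$SSH_PRIVATE_KEY" | tr -d '\r' | ssh-add -
}

# Usage inside the job, before any docker command that targets the server:
#   trust_servers
#   load_deploy_key
```

Keeping the host keys in a variable, rather than disabling host key checking, means the job fails if a server presents an unexpected key.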

Example project - .gitlab-ci.yml

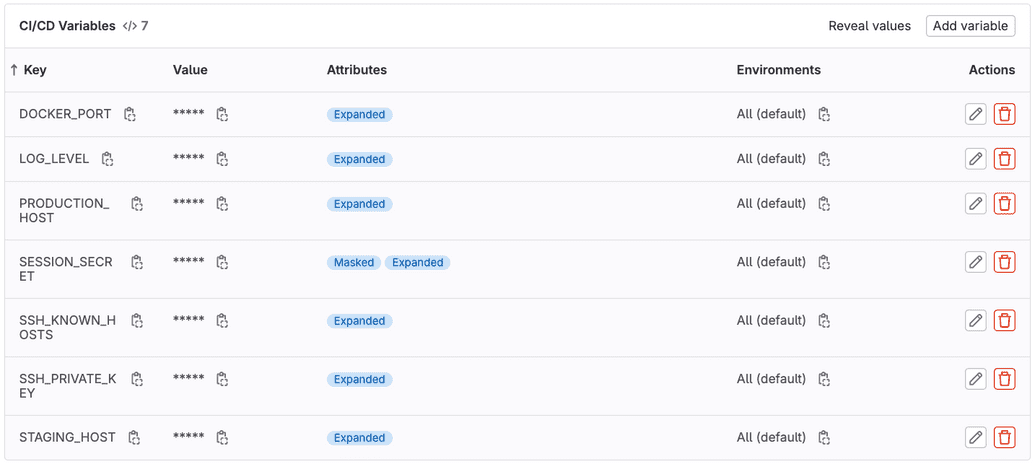

Pipeline environment variables

You need to set some environment variables on your GitLab project. (Settings -> CI/CD -> Variables)

- PRODUCTION_HOST: The IP address of the production server.

- STAGING_HOST: The IP address of the staging server.

- SSH_PRIVATE_KEY: The private key used to connect to the servers.

- SSH_KNOWN_HOSTS: The output of ssh-keyscan for the servers.

- LOG_LEVER: How much to log. http is normal, but you can also use silent, error, warn, notice, info, verbose, or silly.

- SESSION_SECRET: Used by Node.js and built into the container.

- DOCKER_PORT: The port on which the Docker container is published. (In our scripts, NGINX maps to 8080.)

- BASE_URL: The subdirectory the application is deployed under. If it is not deployed in a subdirectory, use /.
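A missing variable tends to show up as a confusing error late in the job, so it can help to check for them up front. The sketch below only covers the four connection-related variables. The missing_vars function is a hypothetical helper, and the IPs in the usage comment are placeholders.

```shell
# Print the name of every connection-related variable that is unset or empty.
missing_vars() {
  for name in PRODUCTION_HOST STAGING_HOST SSH_PRIVATE_KEY SSH_KNOWN_HOSTS; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || echo "$name"
  done
}

# Usage:
#   Produce the value for SSH_KNOWN_HOSTS on your own machine:
#     ssh-keyscan <staging-ip> <production-ip>
#   Fail the job early if something is missing:
#     [ -z "$(missing_vars)" ] || { echo "Missing: $(missing_vars)"; exit 1; }
```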

3.2 Confirm staging

Time to confirm the functionality of the staging stage of the pipeline.

Start by validating the pipeline: open the "CI/CD editor" (CI/CD->Editor) and click "validate". The editor can also show a visualization of the pipeline and, if you import parts of the pipeline from other places, a merged version of it.

To run the pipeline you can either:

- Push a new commit to the project.

- Click "Run Pipeline" in CI/CD->Pipelines.

You can always enter a stage in the pipeline and look at the command-line output to troubleshoot.

Make a notable change in the CSS or a view file and wait for the pipeline to finish executing. Click Deployments->Environments to get the link to the application. Visit and confirm.

3.3 Deployment stage

If everything worked in the previous stage, you can copy the staging job to the deploy stage and point it at the production server by using PRODUCTION_HOST instead of STAGING_HOST. You also need to add when: manual, which makes the deployment to production a manual step that you trigger after you have confirmed and tested the application on staging.

Wrapping up

You now have a CI/CD pipeline for your application. You can develop the application locally, and new versions are easily deployable.